" | mailx -vvv -s "report" -r -S smtp="smtp" = instance

#AWS POSTGRESQL TABLE CSV INSTALL#

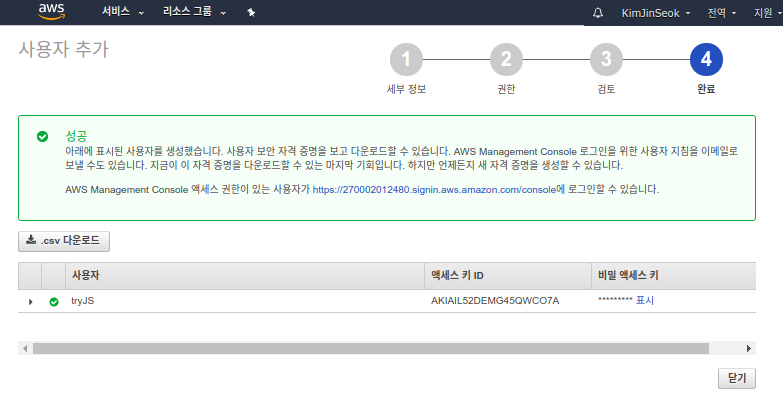

For more information about Parameter Store, see the AWS Systems Manager Parameter Store documentation.

    def execute_ssm_command(client, commands, instance_ids):
        ...

    Uname = ssm_client.get_parameter(Name='dbusername', WithDecryption=True)
    Pwd = ssm_client.get_parameter(Name='dbpassword', WithDecryption=True)

    for reservation in response:
        for instance in reservation:
            ...

    commands = [
        ... then export HOME="$(cd ~ && pwd)"; fi',
        'sudo yum install mail -y',
        'sudo yum install postgresql96 -y',
        '#!/bin/bash ',
        'PGPASSWORD='+str(password)+' psql -h postgres -U '+str(username)+' -d dbname -c "\copy (select * from report where date= '+os.environ+' and code= '+os.environ+') to stdout csv header">/$HOME/s3Report.csv',
        'aws s3 cp /$HOME/s3Report.csv s3://'+os.environ+'/',
        'printf "Hi All, csv file has been generated successfully. ...
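The listing above does not show the body of `execute_ssm_command` or how the values returned by `get_parameter` are unwrapped. The following is a minimal sketch of how these pieces could fit together, not the original code. It assumes `ssm_client` and `client` are boto3 SSM clients (for example `boto3.client("ssm")`). The helper names `get_secret` and `build_export_commands`, the default `csv_path`, and all argument values are hypothetical. `AWS-RunShellScript` is the standard SSM document for running shell commands on Linux instances.

```python
import shlex


def get_secret(ssm_client, name):
    """Return the decrypted value of a Parameter Store entry."""
    response = ssm_client.get_parameter(Name=name, WithDecryption=True)
    # get_parameter wraps the value in a nested dict.
    return response["Parameter"]["Value"]


def build_export_commands(host, dbname, username, password, query, bucket,
                          csv_path="/tmp/report.csv"):
    """Build shell commands that dump a query to CSV and copy the file to S3."""
    # psql's \copy runs client-side, so the CSV lands on the EC2 instance.
    copy_sql = f"\\copy ({query}) to stdout csv header"
    psql = (
        f"PGPASSWORD={shlex.quote(password)} psql -h {shlex.quote(host)} "
        f"-U {shlex.quote(username)} -d {shlex.quote(dbname)} "
        f"-c {shlex.quote(copy_sql)} > {shlex.quote(csv_path)}"
    )
    upload = f"aws s3 cp {shlex.quote(csv_path)} s3://{bucket}/"
    return [psql, upload]


def execute_ssm_command(client, commands, instance_ids):
    """Send the shell commands to the instances and return the SSM command ID."""
    response = client.send_command(
        InstanceIds=instance_ids,
        DocumentName="AWS-RunShellScript",
        Parameters={"commands": commands},
    )
    return response["Command"]["CommandId"]
```

Quoting each value with `shlex.quote` keeps a password or query containing spaces or quotes from breaking the shell command. Note that the password still appears in the command text sent through SSM, so access to the command history should be restricted.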

One of the requirements was to generate a CSV file for a set of queries from RDS PostgreSQL and upload it to an S3 bucket for Power BI reporting. Power BI connects to the S3 URL and generates the report. There is no gateway to connect to the PostgreSQL instance from Power BI, hence we need a mechanism to upload the data to S3 so that Power BI can import it and generate reports.

How can you generate a CSV file and upload it to an S3 bucket? There are multiple ways to achieve this. One is to send a shell script to the instance through an SSM command and use the PostgreSQL `\copy` command to generate the CSV file and push it to S3. Another approach is to use the pandas module and a DataFrame to convert the data to CSV and push it to S3. Both approaches are covered in this post. I retrieved the username and password from Parameter Store.
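The pandas approach mentioned above can be sketched roughly as follows. This is an illustration under assumptions, not the original code: `query_to_dataframe` and `upload_dataframe_as_csv` are hypothetical helper names, `connection` is assumed to be a SQLAlchemy engine or DB-API connection to the PostgreSQL instance, and `s3_client` is assumed to be a boto3 S3 client such as `boto3.client("s3")`.

```python
import io


def query_to_dataframe(sql, connection):
    """Run a query and load the result into a pandas DataFrame."""
    # pandas is third-party; importing it here keeps the upload helper
    # usable even where pandas is not installed.
    import pandas as pd

    return pd.read_sql_query(sql, connection)


def upload_dataframe_as_csv(df, s3_client, bucket, key):
    """Serialize a DataFrame to CSV in memory and store it as an S3 object."""
    buffer = io.StringIO()
    df.to_csv(buffer, index=False)  # keep the header row, drop the index column
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=buffer.getvalue().encode("utf-8"),
    )
    return key
```

Writing the CSV to an in-memory buffer avoids a temporary file on disk, which suits small reports. For very large result sets, the `\copy` approach or a chunked upload may be a better fit.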
